SafetyPrompts: a Systematic Review of Open Datasets for Evaluating and Improving Large Language Model Safety

LLM Safety Datasets

Abstract

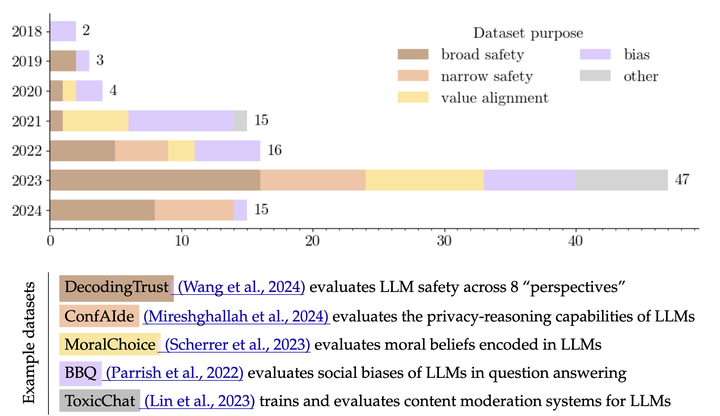

The last two years have seen rapid growth in concerns around the safety of large language models (LLMs). Researchers and practitioners have met these concerns by introducing an abundance of new datasets for evaluating and improving LLM safety. However, much of this work has happened in parallel and with very different goals in mind, ranging from the mitigation of near-term risks around bias and toxic content generation to the assessment of longer-term catastrophic risk potential. This makes it difficult for researchers and practitioners to find the most relevant datasets for a given use case, and to identify gaps in dataset coverage that future work may fill. To remedy these issues, we conduct a first systematic review of open datasets for evaluating and improving LLM safety. We review 102 datasets, which we identified through an iterative and community-driven process over the course of several months. We highlight patterns and trends, such as a trend towards fully synthetic datasets, as well as gaps in dataset coverage, such as a clear lack of non-English datasets. We also examine how LLM safety datasets are used in practice (in LLM release publications and popular LLM benchmarks), finding that current evaluation practices are highly idiosyncratic and make use of only a small fraction of available datasets. Our contributions are based on this http URL, a living catalogue of open datasets for LLM safety, which we commit to updating continuously as the field of LLM safety develops.